
Anonymized Synthetic Data Generation by DP-GAN

1. Why can generating synthetic data preserve data privacy?

Many industries, from insurance companies and financial institutions to healthcare providers, have access to an ocean of information (in the form of structured tabular data). This information can support more informed decisions and new strategies and policies that not only increase their profits but also improve the quality of their services, resulting in higher customer satisfaction. Machine learning and data mining techniques can effectively extract data-driven insights from such large and rich datasets, provided the data can be shared with third-party research institutions.

Notably, these datasets usually contain a large amount of sensitive personal information, and mishandling them can seriously threaten customers' privacy. Thus, before sharing such data with any third-party research institution, the data holders have to guarantee the privacy of their customers' information.

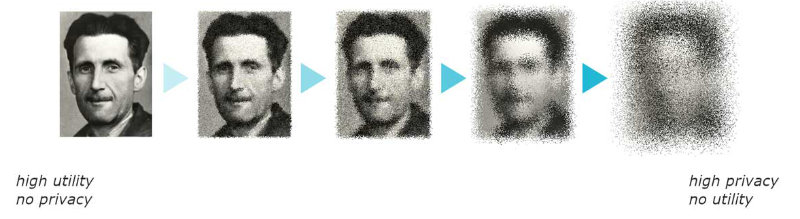

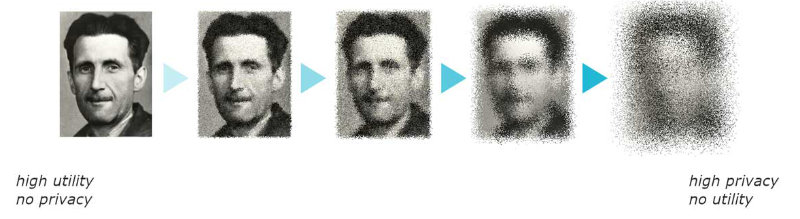

Data anonymization is one approach to preserving data privacy: it aims to make re-identification attacks very hard or, preferably, impossible. At the same time, the anonymized data should preserve the statistical properties and patterns of the original, unprotected data, so that ML techniques can still learn these patterns for a variety of tasks, such as building predictive models. This leads to a dilemma: privacy vs. utility.

To address both criteria (privacy vs. utility), one needs a method for generating synthetic anonymized data that (I) retains the statistical properties of the original data (measured by a utility metric) while (II) preserving the privacy of customers' information (quantified by a privacy metric, e.g. differential privacy, DP).
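For reference, this is the standard definition behind DP. A randomized mechanism $\mathcal{M}$ (here, the procedure that trains the generative model and releases its output) is $(\varepsilon,\delta)$-differentially private if, for every pair of datasets $\mathcal{D}$ and $\mathcal{D}'$ that differ in a single record and every set of possible outputs $S$,

$$
\Pr[\mathcal{M}(\mathcal{D}) \in S] \;\le\; e^{\varepsilon}\,\Pr[\mathcal{M}(\mathcal{D}') \in S] + \delta .
$$

Smaller values of $\varepsilon$ and $\delta$ mean that adding or removing any single customer's record changes the distribution of the released output less, which is what makes DP usable as a privacy metric.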

To create such synthetic anonymized data, one can generate either fully or partially synthetic data. In the former, all features (attributes) of the dataset are considered sensitive, so analysts generate fully synthetic records to be used instead of the original ones. In the latter, only some features are regarded as sensitive, and analysts either synthesize values for these attributes or censor them, without hurting utility while keeping the privacy risk (e.g. identity disclosure) low.
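As a deliberately naive illustration of partial synthesis (not the method used in this post), the sketch below replaces only the columns declared sensitive by values resampled from each column's own empirical distribution, and copies the other columns unchanged. The function name `partially_synthesize` and the toy columns are hypothetical, and the approach carries no formal privacy guarantee: it still releases real values, only unlinked from their original records.

```python
import numpy as np
import pandas as pd


def partially_synthesize(df, sensitive_cols, seed=0):
    """Hypothetical helper: resample each sensitive column independently from
    its observed values; non-sensitive columns are copied unchanged."""
    rng = np.random.default_rng(seed)
    out = df.copy()
    for col in sensitive_cols:
        # draw with replacement from this column's empirical marginal distribution
        out[col] = rng.choice(df[col].to_numpy(), size=len(df), replace=True)
    return out


# toy records, purely illustrative
records = pd.DataFrame({
    "age": [34, 51, 29, 62],
    "income": [42000, 88000, 31000, 57000],
    "region": ["north", "south", "south", "east"],
})
synthetic = partially_synthesize(records, sensitive_cols=["income"])
print(synthetic)
```

Because each sensitive column is resampled on its own, its correlation with the remaining attributes is lost. Preserving such dependencies while limiting disclosure is exactly what generative models such as GANs try to achieve.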

2. MedGAN: a GAN variant for generating data of different types

Generally speaking, a vanilla GAN learns to model the data distribution implicitly; by sampling from this learned distribution, many new data samples can be generated. However, GANs were originally proposed for image datasets, whose samples have real-valued features, and they are trained without any privacy measures. Here, we aim to generate privacy-preserving synthetic tabular data with categorical, discrete, binary, or mixed features (data attributes). MedGAN [1] was proposed to generate such discrete-valued synthetic records, particularly for structured medical datasets.
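For reference, the vanilla GAN (Goodfellow et al., 2014) trains a discriminator $D$ to assign high probability to real samples and low probability to generated ones, while the generator $G$ is trained to fool it, through the minimax objective

$$
\min_{G}\max_{D}\; \mathbb{E}_{\mathbf{x}\sim p_{\text{data}}}\big[\log D(\mathbf{x})\big] + \mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}}\big[\log\big(1 - D(G(\mathbf{z}))\big)\big].
$$

In the idealized setting of unlimited model capacity, the optimum of this game is reached when the distribution of $G(\mathbf{z})$ equals $p_{\text{data}}$, which is the sense in which a GAN "estimates" the data distribution.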

To generate discrete-valued synthetic data, the authors of MedGAN incorporate an autoencoder. The pre-trained encoder $Enc(\cdot)$ maps each real record $\mathbf{x}\in\mathbb{Z}_{+}^{D}$ (from a $D$-dimensional discrete-valued space) into a continuous feature space, and the decoder $Dec(\cdot)$ maps it back to the discrete-valued space. The generator $G(\cdot)$ takes a random prior $\mathbf{z}$ and produces a continuous-valued feature vector $G(\mathbf{z})$, which is then mapped back to the discrete-valued space as $Dec(G(\mathbf{z}))$. Finally, the discriminator is trained to distinguish the generated samples $Dec(G(\mathbf{z}))$ from the real samples $\mathbf{x}$.
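To make this data flow concrete, here is a NumPy forward-pass sketch of the encoder/decoder/generator pipeline, restricted to binary features for simplicity. The sizes `D`, `H`, `Z`, the tanh/sigmoid activations, the 0.5 rounding threshold, and the random weights standing in for trained parameters are all assumptions for illustration; neither training nor the discriminator is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# hypothetical sizes: D binary features, latent code of size H, noise of size Z
D, H, Z = 12, 4, 6


def dense(n_in, n_out):
    # random weights stand in for trained parameters
    return rng.normal(scale=0.1, size=(n_in, n_out)), np.zeros(n_out)


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


W_enc, b_enc = dense(D, H)
W_dec, b_dec = dense(H, D)
W_gen, b_gen = dense(Z, H)


def enc(x):
    # discrete record -> continuous code
    return np.tanh(x @ W_enc + b_enc)


def dec(h):
    # continuous code -> per-feature probabilities in (0, 1)
    return sigmoid(h @ W_dec + b_dec)


def gen(z):
    # random prior -> continuous code with the same size as enc(x)
    return np.tanh(z @ W_gen + b_gen)


x_real = rng.integers(0, 2, size=(5, D))    # 5 binary records
z = rng.normal(size=(5, Z))

x_recon = dec(enc(x_real))                  # autoencoder path, used for pre-training
x_fake = dec(gen(z))                        # generator path, what the discriminator sees
x_fake_binary = (x_fake > 0.5).astype(int)  # round back to the discrete space

print(x_recon.shape, x_fake.shape, x_fake_binary[0])
```

The key design point is that the generator never has to output discrete values directly: it only needs to land in the decoder's continuous input space, and the pre-trained decoder handles the mapping back to discrete records.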

The privacy of the data generated by MedGAN has been assessed empirically with different privacy metrics (Choi et al., 2017; Goncalves et al., 2020), but it is preferable to train the generative model explicitly to preserve privacy, e.g. with a differential privacy guarantee.
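One common way to train a GAN explicitly for privacy is to update the discriminator, the only network that touches real records, with DP-SGD-style steps: each example's gradient is clipped to a maximum L2 norm, and Gaussian noise is added to the sum before averaging. The NumPy sketch below shows just that aggregation step. The function `dp_aggregate` and its parameter names are hypothetical, and converting `noise_multiplier` into a concrete $(\varepsilon,\delta)$ requires a privacy accountant that is not shown.

```python
import numpy as np


def dp_aggregate(per_example_grads, clip_norm, noise_multiplier, rng):
    """Hypothetical DP-SGD-style aggregation.

    per_example_grads has shape (batch, n_params). Each row is rescaled so its
    L2 norm is at most clip_norm, the rows are summed, Gaussian noise with
    standard deviation noise_multiplier * clip_norm is added, and the result
    is divided by the batch size.
    """
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # scale factor is 1 for rows already inside the clipping ball
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * factors
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=per_example_grads.shape[1])
    return (clipped.sum(axis=0) + noise) / per_example_grads.shape[0]


rng = np.random.default_rng(0)
grads = np.array([[3.0, 4.0],    # norm 5: clipped down to norm 1
                  [0.3, 0.4]])   # norm 0.5: left unchanged
print(dp_aggregate(grads, clip_norm=1.0, noise_multiplier=0.0, rng=rng))
```

Clipping bounds how much any single record can move the update, and the noise masks what remains. Under this scheme the generator only receives gradients through the privatized discriminator, so the guarantee carries over to it by the post-processing property of differential privacy.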

3. Experiment

3.1. Dataset

We use the Home Credit dataset, a Kaggle dataset for predicting customers' repayment abilities from their attributes. First, the dataset is pre-processed as follows:

- Outlier removal: for each float attribute, records whose z-score exceeds 3 are removed.

- Data imputation: missing values of real-valued and categorical attributes are imputed with the mean and the most frequent value, respectively.

- Encoding categorical attributes: string-valued categorical attributes are encoded as integer labels.

- Data normalization: float attributes with more than two distinct values are min-max scaled to the interval $[0,1]$.
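Written out, the outlier rule and the scaling step are as follows, for a value $x$ of an attribute whose mean, standard deviation, minimum and maximum over the training data are $\mu$, $\sigma$, $x_{\min}$ and $x_{\max}$:

$$
z = \frac{x-\mu}{\sigma}\quad(\text{record removed if } z > 3),
\qquad
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} .
$$

Only the upper tail is filtered by this rule, and since the scaler is fitted on the training data only, test values outside the training range map outside $[0,1]$.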

```python
import os
import sys
import copy

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn import neighbors as sk_nn
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder, LabelBinarizer, LabelEncoder
from sklearn.ensemble import RandomForestRegressor

%matplotlib inline
```

The procedure for preparing the data:

1. Load the data and put the "TARGET" column in a separate label series.

2. Use `describe()` on the dataframe to inspect the values.

3. Convert the data into a human-readable format (e.g. the negative day counts in the `DAYS_*` columns, such as age in `DAYS_BIRTH`, are converted to positive years).

4. Detect and remove outliers (visually: box plot, histogram; analytically: z-score thresholding).

5. Impute missing values.

6. Scale numerical data to $[0,1]$ (min-max normalization).

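7. Encode categorical data as integer labels.

```python
def plot_box(df):
    # one box plot per float column, for visual outlier inspection
    float_cols = [col for col in df if df[col].dtypes == 'float64']
    # square grid just large enough for all float columns
    n = int(np.ceil(np.sqrt(len(float_cols))))
    plt.figure(figsize=(20, 20))
    for i, col in enumerate(float_cols, start=1):
        plt.subplot(n, n, i)
        df[col].plot.box()


def plot_nans(df):
    # number of missing values per column, plotted against the column position
    plt.figure(figsize=(10, 6))
    plt.title('missing values for %d features' % len(df.columns))
    plt.plot(range(len(df.columns)), df.isna().sum())
    plt.ticklabel_format(style='sci', axis='y', scilimits=(0, 0))
```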

```python
tr_data = pd.read_csv('home-credit/application_train.csv')
te_data = pd.read_csv('home-credit/application_test.csv')
print(len(tr_data), tr_data.columns)
```

```
307511 Index(['SK_ID_CURR', 'TARGET', 'NAME_CONTRACT_TYPE', 'CODE_GENDER',
'FLAG_OWN_CAR', 'FLAG_OWN_REALTY', 'CNT_CHILDREN', 'AMT_INCOME_TOTAL',
'AMT_CREDIT', 'AMT_ANNUITY',
...
'FLAG_DOCUMENT_18', 'FLAG_DOCUMENT_19', 'FLAG_DOCUMENT_20',
'FLAG_DOCUMENT_21', 'AMT_REQ_CREDIT_BUREAU_HOUR',
'AMT_REQ_CREDIT_BUREAU_DAY', 'AMT_REQ_CREDIT_BUREAU_WEEK',
'AMT_REQ_CREDIT_BUREAU_MON', 'AMT_REQ_CREDIT_BUREAU_QRT',
'AMT_REQ_CREDIT_BUREAU_YEAR'],
dtype='object', length=122)
```

```python
# types of data in the dataframe
print(tr_data.dtypes)
print('===== number of each datatype in dataframe')
print(tr_data.dtypes.value_counts())
print('=========')
print('====== number of categories for categorical data')
print(tr_data.select_dtypes('object').apply(pd.Series.nunique, axis=0))
```

```
SK_ID_CURR int64
TARGET int64
NAME_CONTRACT_TYPE object
CODE_GENDER object
FLAG_OWN_CAR object
...
AMT_REQ_CREDIT_BUREAU_DAY float64
AMT_REQ_CREDIT_BUREAU_WEEK float64
AMT_REQ_CREDIT_BUREAU_MON float64
AMT_REQ_CREDIT_BUREAU_QRT float64
AMT_REQ_CREDIT_BUREAU_YEAR float64
Length: 122, dtype: object
===== number of each datatype in dataframe
float64 65
int64 41
object 16
dtype: int64
=========
====== number of categories for categorical data
NAME_CONTRACT_TYPE 2
CODE_GENDER 3
FLAG_OWN_CAR 2
FLAG_OWN_REALTY 2
NAME_TYPE_SUITE 7
NAME_INCOME_TYPE 8
NAME_EDUCATION_TYPE 5
NAME_FAMILY_STATUS 6
NAME_HOUSING_TYPE 6
OCCUPATION_TYPE 18
WEEKDAY_APPR_PROCESS_START 7
ORGANIZATION_TYPE 58
FONDKAPREMONT_MODE 4
HOUSETYPE_MODE 3
WALLSMATERIAL_MODE 7
EMERGENCYSTATE_MODE 2
dtype: int64
```

```python
# number of missing values per attribute
print(tr_data.isna().sum())
plot_nans(tr_data)
```

```
SK_ID_CURR 0
TARGET 0
NAME_CONTRACT_TYPE 0
CODE_GENDER 0
FLAG_OWN_CAR 0
...
AMT_REQ_CREDIT_BUREAU_DAY 41519
AMT_REQ_CREDIT_BUREAU_WEEK 41519
AMT_REQ_CREDIT_BUREAU_MON 41519
AMT_REQ_CREDIT_BUREAU_QRT 41519
AMT_REQ_CREDIT_BUREAU_YEAR 41519
Length: 122, dtype: int64
```

```python
# step 1: put the TARGET column in a separate label series and drop the ID column
y_tr = tr_data['TARGET']
x_tr = tr_data.drop(columns=['TARGET', 'SK_ID_CURR'])
x_te = te_data.drop(columns=['SK_ID_CURR'])

x_tr.describe()
```

| | CNT_CHILDREN | AMT_INCOME_TOTAL | AMT_CREDIT | AMT_ANNUITY | AMT_GOODS_PRICE | REGION_POPULATION_RELATIVE | DAYS_BIRTH | DAYS_EMPLOYED | DAYS_REGISTRATION | DAYS_ID_PUBLISH | ... | FLAG_DOCUMENT_18 | FLAG_DOCUMENT_19 | FLAG_DOCUMENT_20 | FLAG_DOCUMENT_21 | AMT_REQ_CREDIT_BUREAU_HOUR | AMT_REQ_CREDIT_BUREAU_DAY | AMT_REQ_CREDIT_BUREAU_WEEK | AMT_REQ_CREDIT_BUREAU_MON | AMT_REQ_CREDIT_BUREAU_QRT | AMT_REQ_CREDIT_BUREAU_YEAR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 307511.000000 | 3.075110e+05 | 3.075110e+05 | 307499.000000 | 3.072330e+05 | 307511.000000 | 307511.000000 | 307511.000000 | 307511.000000 | 307511.000000 | ... | 307511.000000 | 307511.000000 | 307511.000000 | 307511.000000 | 265992.000000 | 265992.000000 | 265992.000000 | 265992.000000 | 265992.000000 | 265992.000000 |
| mean | 0.417052 | 1.687979e+05 | 5.990260e+05 | 27108.573909 | 5.383962e+05 | 0.020868 | -16036.995067 | 63815.045904 | -4986.120328 | -2994.202373 | ... | 0.008130 | 0.000595 | 0.000507 | 0.000335 | 0.006402 | 0.007000 | 0.034362 | 0.267395 | 0.265474 | 1.899974 |
| std | 0.722121 | 2.371231e+05 | 4.024908e+05 | 14493.737315 | 3.694465e+05 | 0.013831 | 4363.988632 | 141275.766519 | 3522.886321 | 1509.450419 | ... | 0.089798 | 0.024387 | 0.022518 | 0.018299 | 0.083849 | 0.110757 | 0.204685 | 0.916002 | 0.794056 | 1.869295 |
| min | 0.000000 | 2.565000e+04 | 4.500000e+04 | 1615.500000 | 4.050000e+04 | 0.000290 | -25229.000000 | -17912.000000 | -24672.000000 | -7197.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| 25% | 0.000000 | 1.125000e+05 | 2.700000e+05 | 16524.000000 | 2.385000e+05 | 0.010006 | -19682.000000 | -2760.000000 | -7479.500000 | -4299.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| 50% | 0.000000 | 1.471500e+05 | 5.135310e+05 | 24903.000000 | 4.500000e+05 | 0.018850 | -15750.000000 | -1213.000000 | -4504.000000 | -3254.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 1.000000 |
| 75% | 1.000000 | 2.025000e+05 | 8.086500e+05 | 34596.000000 | 6.795000e+05 | 0.028663 | -12413.000000 | -289.000000 | -2010.000000 | -1720.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 3.000000 |
| max | 19.000000 | 1.170000e+08 | 4.050000e+06 | 258025.500000 | 4.050000e+06 | 0.072508 | -7489.000000 | 365243.000000 | 0.000000 | 0.000000 | ... | 1.000000 | 1.000000 | 1.000000 | 1.000000 | 4.000000 | 9.000000 | 8.000000 | 27.000000 | 261.000000 | 25.000000 |

8 rows × 104 columns

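```python
df = x_tr.copy()
df_te = x_te.copy()

# the dataset marks some missing categories as 'XNA'; replace them with NaN
df.replace('XNA', np.nan, inplace=True)
df_te.replace('XNA', np.nan, inplace=True)

# step 3: convert the negative day counts to positive years, in train and test alike
for c in df.columns:
    if c.startswith('DAYS'):
        df[c] = df[c] / -365
        df_te[c] = df_te[c] / -365

# step 4: outlier removal on the float columns of the training data
from scipy import stats

# two passes, since dropping rows changes each column's mean and standard deviation
for _ in range(2):
    for col in df:
        if df[col].dtypes == 'float64':
            # with the default nan_policy='propagate', a column containing NaN gets
            # NaN z-scores and is therefore not filtered; nan_policy='omit' would filter it
            df.drop(index=df[col][stats.zscore(df[col]) > 3].index, inplace=True)

# keep the labels aligned with the remaining training rows
y_tr = y_tr.loc[df.index]
```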

```python
# step 5: data imputation: replace NaNs
from sklearn.experimental import enable_iterative_imputer  # enables IterativeImputer
from sklearn.impute import IterativeImputer, KNNImputer, SimpleImputer


def imputer(df, df_te):
    """Impute each column of df in place (mean for float columns, most frequent
    value otherwise) and apply the same fitted imputer to df_te."""
    for col in df:
        if df[col].dtypes == 'float64':
            # alternative: imp = KNNImputer(missing_values=np.nan)
            imp = SimpleImputer(missing_values=np.nan)  # default strategy='mean'
        else:
            imp = SimpleImputer(missing_values=np.nan, strategy='most_frequent')
        # fit on the training column only, then transform train and test
        imp.fit(df[col].values.reshape(-1, 1))
        df[col] = imp.transform(df[col].values.reshape(-1, 1)).flatten()
        df_te[col] = imp.transform(df_te[col].values.reshape(-1, 1)).flatten()


imputer(df, df_te)

# plot to check that the NaNs are gone
plot_nans(df_te)
```

```python
# step 6: scale float data to [0, 1]; step 7: label-encode categorical data
def normalization(train_data, test_data):
    for col in train_data:
        dtype = train_data[col].dtypes
        if dtype == 'float64' and train_data[col].nunique() > 2:
            # min-max scaling to [0, 1], fitted on the training data only
            f = MinMaxScaler()
            f.fit(train_data[col].values.reshape(-1, 1))
            tr = f.transform(train_data[col].values.reshape(-1, 1))
            te = f.transform(test_data[col].values.reshape(-1, 1))
        elif dtype == 'object':
            f = LabelEncoder()
            # categories that appear only in the test data force fitting the
            # encoder on train and test together
            f.fit(pd.concat((train_data[col], test_data[col]), axis=0))
            tr = f.transform(train_data[col])
            te = f.transform(test_data[col])
        else:
            # integer and binary float columns are left unchanged
            continue
        train_data[col] = tr.flatten()
        test_data[col] = te.flatten()


normalization(df, df_te)
```
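The comment in `normalization` notes that test-only categories force fitting the `LabelEncoder` on train and test together. An alternative that keeps the test set out of fitting is to learn the category list from the training data only and map unseen test categories to a reserved code. A minimal pandas sketch, where the helper name `encode_with_train_categories`, the reserved value `-1`, and the toy values are illustrative choices:

```python
import pandas as pd


def encode_with_train_categories(train_col, test_col):
    # categories learned from the training column only, in sorted order
    categories = pd.Index(sorted(train_col.dropna().unique()))
    train_codes = pd.Categorical(train_col, categories=categories).codes
    # values absent from `categories` (and NaN) receive the code -1
    test_codes = pd.Categorical(test_col, categories=categories).codes
    return train_codes, test_codes


tr_codes, te_codes = encode_with_train_categories(
    pd.Series(["cash", "revolving", "cash"]),
    pd.Series(["revolving", "unknown"]),
)
print(tr_codes, te_codes)
```

The trade-off is that every unseen category collapses into one code, whereas fitting on the concatenated data gives each category its own label but lets the test set influence the encoding.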

```python
df.to_csv('Processed_train_house.csv')
df_te.to_csv('Processed_test_house.csv')
df.info()
```

```
<class 'pandas.core.frame.DataFrame'>
Int64Index: 288352 entries, 0 to 307510
Columns: 120 entries, NAME_CONTRACT_TYPE to AMT_REQ_CREDIT_BUREAU_YEAR
dtypes: float64(68), int64(52)
memory usage: 276.2 MB
```

4. Reference

- [1] Edward Choi, Siddharth Biswal, Bradley Malin, Jon Duke, Walter F. Stewart, Jimeng Sun, "Generating Multi-label Discrete Patient Records using Generative Adversarial Networks", Machine Learning for Healthcare Conference (MLHC), 2017.